DevelopmentNow’s Design Week Portland Open House

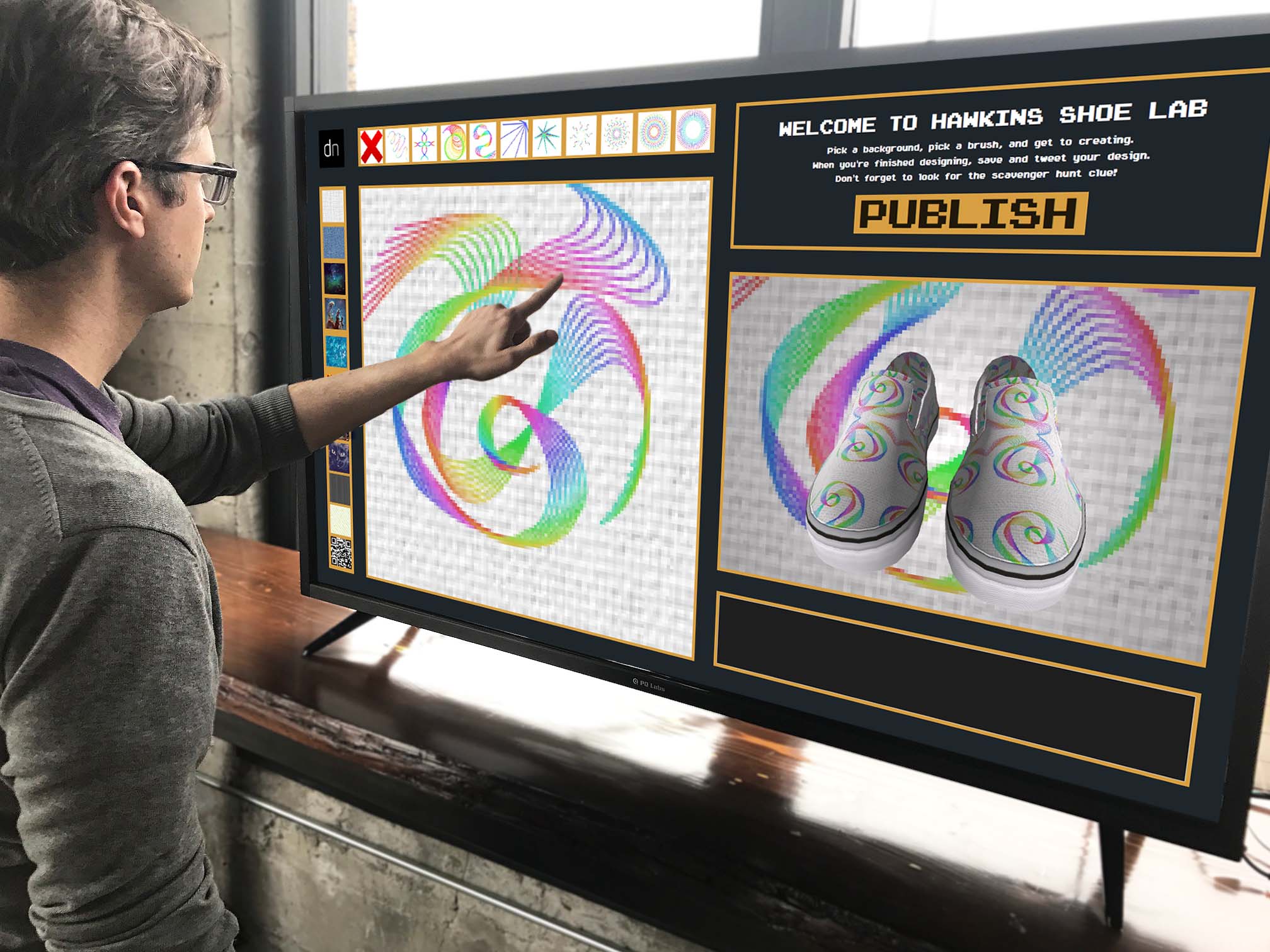

Hawkins Shoe Lab

Hawkins Shoe Lab could have big implications for retail. Today, product customization mostly happens online and is usually limited to what a company's production facilities can manufacture. Even after you finish your limited design, it takes 4-6 weeks to receive the product. Now imagine being in-store with unlimited design possibilities. Pair that with 3D printing and the whole customization experience is transformed. We're thinking forward with this technology and hope to see interactive touchscreen displays running this real-time design application in stores in the near future.

Using multi-touch gestures with preloaded brushes, colors, and patterns, users can take a plain shoe and create their own design in real time. The design is mirrored on a 3D-rendered shoe right next to the canvas. Our display offered a set of rainbow-colored brushes, but both the color palette and the brush behaviors are customizable.
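The real-time painting idea can be sketched in a few lines. This is a minimal Python illustration, not the SceneKit/PQLabs code the installation actually ran: it assumes the shoe's texture is a plain 2D RGB pixel buffer and that every active touch point stamps a round brush into it. `Canvas`, `apply_touches`, and the `RAINBOW` palette are hypothetical names chosen for the example.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]

# Hypothetical seven-color "rainbow" palette; the real palette was customizable.
RAINBOW: List[Color] = [
    (255, 0, 0), (255, 127, 0), (255, 255, 0), (0, 255, 0),
    (0, 0, 255), (75, 0, 130), (148, 0, 211),
]


class Canvas:
    """A 2D RGB pixel buffer standing in for the shoe's texture (hypothetical)."""

    def __init__(self, width: int, height: int, background: Color = (255, 255, 255)):
        self.width = width
        self.height = height
        # One independent list per row so painting one row never aliases another.
        self.pixels: List[List[Color]] = [[background] * width for _ in range(height)]

    def stamp(self, x: int, y: int, radius: int, color: Color) -> None:
        """Paint a filled circle centered on (x, y), clipped to the canvas edges."""
        for py in range(max(0, y - radius), min(self.height, y + radius + 1)):
            for px in range(max(0, x - radius), min(self.width, x + radius + 1)):
                if (px - x) ** 2 + (py - y) ** 2 <= radius ** 2:
                    self.pixels[py][px] = color


def apply_touches(canvas: Canvas, touches: Sequence[Tuple[int, int]],
                  radius: int, palette: Sequence[Color]) -> None:
    """Stamp every simultaneous touch point, giving each finger its own palette color."""
    for i, (x, y) in enumerate(touches):
        canvas.stamp(x, y, radius, palette[i % len(palette)])


# Two fingers down at once on a small 8x8 canvas.
canvas = Canvas(8, 8)
apply_touches(canvas, [(1, 1), (6, 6)], radius=1, palette=RAINBOW)
```

In a 3D app, a buffer like this would then serve as the shoe model's texture, so the rendered shoe updates as each stroke lands.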

Hardware

PQLabs G4 Touchscreen Overlay

Software

SceneKit and PQLabs Touchscreen SDK

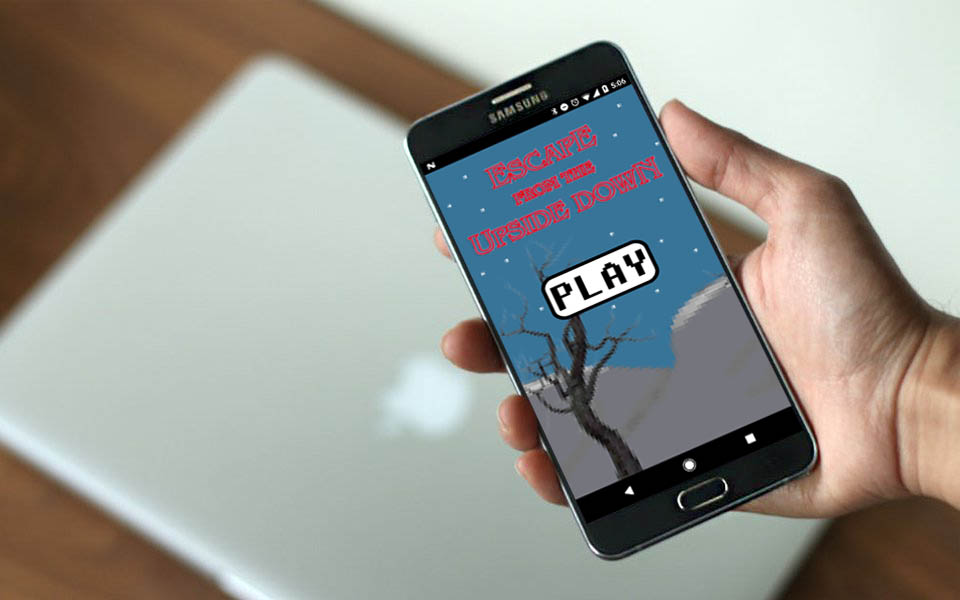

Escape from the Upside Down

How can you get groups of strangers to connect instantly while laughing at one another? Have them control a mobile game with their faces. This project was an exploration of facial recognition software and its possible applications. Facial recognition is most often thought of as just another biometric security measure, but the use cases don't stop there. Our game controls were fairly rudimentary: you either smiled or you didn't. As facial recognition improves, though, the interface could become far more sensitive, which could mean big leaps in accessibility. And with emotion recognition, brands could get instant feedback based on users' emotions.

Hardware

Android Tablet

Software

Google Vision API and Android SDK

Whack a Waffle

This installation quickly revealed which of our Design Week attendees were the competitive ones. Our take on “Whack-A-Mole” used old, recycled Android devices and Google’s Nearby Connections API, which let the devices communicate with one another directly and seamlessly, without us having to set up Bluetooth pairing ourselves. Whether the devices are old or new, this technology can be used for real-time collaboration, shared experiences, decentralized social networks, video games, live events, and more.

Hardware

Recycled Android devices

Software

Google Nearby Connections API and Android SDK

Project 11

Project 11 was built to showcase the capabilities of artificial intelligence, starting with a front-end application and adding voice recognition in version 2. We built a “Stranger Things”-inspired board: an AI chatbot receives the user’s question (typed into the mobile web app, or spoken in V2) and then spells out its response on addressable LED lights, each light corresponding to a letter of the alphabet.
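The letter-by-letter output boils down to mapping characters to LED indices and emitting one frame per letter. This Python sketch is not the project's Node.js/Java stack. It assumes exactly 26 LEDs wired in alphabetical order (LED 0 is "A"), and `letter_to_led`, `opc_frame`, `spell`, and the colors are hypothetical. It does follow the Open Pixel Control (OPC) message layout that the FadeCandy server (fcserver) accepts.

```python
import string
import struct
from typing import List, Optional, Tuple

NUM_LEDS = 26                              # hypothetical: exactly one LED per letter
ON: Tuple[int, int, int] = (255, 180, 90)  # hypothetical warm "bulb" color
OFF: Tuple[int, int, int] = (0, 0, 0)


def letter_to_led(ch: str) -> Optional[int]:
    """Map 'A'/'a' -> 0 ... 'Z'/'z' -> 25; anything else (space, punctuation) -> None."""
    ch = ch.upper()
    if len(ch) == 1 and ch in string.ascii_uppercase:
        return ord(ch) - ord("A")
    return None


def opc_frame(lit_index: Optional[int], channel: int = 0) -> bytes:
    """Build one OPC 'set pixel colors' message that lights a single LED.

    OPC header: channel (1 byte), command 0 (1 byte), data length (2 bytes,
    big-endian), followed by one R, G, B byte triple per LED.
    """
    data = bytearray()
    for i in range(NUM_LEDS):
        data.extend(ON if i == lit_index else OFF)
    return struct.pack(">BBH", channel, 0, len(data)) + bytes(data)


def spell(message: str) -> List[bytes]:
    """One frame per character; non-letters produce an all-dark 'pause' frame."""
    return [opc_frame(letter_to_led(ch)) for ch in message]


frames = spell("Run!")
```

To drive real lights, each frame could be written over TCP to fcserver, which listens for OPC on port 7890 by default, with a short pause between frames so viewers can read each letter.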

We see a multitude of real-world applications for this. We went with an immersive experience built on a pop-culture reference, but something similar could be used for TV/film promotion or live events. It could also provide real-time customer service in stores, hotels, museums, or anywhere else a consumer might have questions. The output doesn’t have to be spelled out letter by letter, either; it could be an avatar speaking the response aloud. In fact, this technology could be built into a roaming robot. Our AI dreams are coming true!

Hardware

Raspberry Pi 3, addressable LED lights, and FadeCandy USB controller

Software

FC Server, Node.js, RESTful API, Java RESTful API, Java WebSocket Server, and ReactJS Web App

PixelStick

We didn’t build or code this apparatus, but we can see it being used for augmented reality projects. Whether you’re a pro or a total noob, it takes long-exposure photography to another level. You upload any image you want (in the correct format) to the PixelStick, and each of its 200 LEDs acts like a pixel on a screen, displaying your image one vertical line at a time as you walk. Captured in a long exposure, these vertical lines combine to recreate your image in midair, while the PixelStick (and the person carrying it) stays invisible.
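The "one vertical line at a time" principle is easy to see in code. Since we didn't program the PixelStick, this is only an illustration of the idea, not its firmware or file format. It assumes the image has already been scaled to exactly 200 pixels tall to match the LEDs, and `image_to_columns` is a hypothetical helper.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
LED_COUNT = 200  # the PixelStick's LED count, per the description above


def image_to_columns(image: List[List[Pixel]], led_count: int = LED_COUNT) -> List[List[Pixel]]:
    """Slice an image (indexed image[row][col]) into vertical columns, left to right.

    Each column is what the LEDs would show at one instant; playing the columns
    back in order while walking paints the full image into a long exposure.
    """
    if len(image) != led_count:
        raise ValueError(f"image must be exactly {led_count} pixels tall")
    width = len(image[0])
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same width")
    return [[row[x] for row in image] for x in range(width)]


# A tiny 3-pixel-wide test image whose pixels encode their own (row, col).
image = [[(r, c, 0) for c in range(3)] for r in range(LED_COUNT)]
columns = image_to_columns(image)
```

With a fixed playback rate, walking speed only changes how stretched or squeezed the image looks horizontally in the final photo.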

Here are some of our favorite images we captured during our event. Check out the entire album here.

Thanks to everyone who came out to our open house. We hope you had as much fun exploring these projects as we did building them. We look forward to seeing you next year!