One of our ongoing internal projects this summer is a rebuild of BrightPot, originally built as a DevelopmentNow Labs project, born after the painful memories of a series of deceased office ferns. The team was interested in the idea of what a “smart garden” would look like, and the idea of utilizing technology like Augmented Reality to make more informed decisions in real life.

The original BrightPot used programmed LED lights that changed color based on the reading from a soil moisture sensor. Our updates since version 1 include using Android Things and a Raspberry Pi 3, and introducing a companion mobile app for the user to view real-time sensor readings. We also wanted to integrate the power of Augmented Reality into our project, so I tasked myself with finding a way to overlay valuable plant health information onto the BrightPot surface. Inspired by Tamagotchi pets, we decided against simply showing sensor readings and chose to include facial expressions to give BrightPot a more emotional connection with its user.

Below, I give a brief overview of the process I went through to incorporate AR into BrightPot.

Many of the awesome AR examples circulating right now are produced by large companies with a lot of time, money and resources, so I found it very important to quickly figure out more practical applications and start sorting out processes that are accessible to me as a developer.

Augmented Reality’s Two Major Components – Tracking and Rendering

Tracking often refers to using image processing and analysis to locate and identify relevant visual features, but it can also mean the use of GPS or other physical sensors like gyroscopes and accelerometers to track the physical location of the user. Often, it incorporates both. True three-dimensional Augmented Reality requires a lot of tracking in order to simulate depth and to track the user’s relative position as they walk around a projected object. For BrightPot, the visual feature that I needed to track was simply the visible surface of a plant pot.

Rendering is the “easier” part – this is when the desired graphic is drawn on top of the camera feed. There are some additional challenges introduced when rendering on top of a live camera feed. It is usually necessary to “smooth” the output so it doesn’t jerk around with tiny movements (humans are very shaky camera operators) and the rendering also has to keep up with the frame rate of the incoming camera feed.
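One common way to do the "smoothing" described above is an exponential moving average over the tracked bounding box. The sketch below is only an illustration of that idea, not the code used in BrightPot; the class name `BoxSmoother` and the `alpha` weight are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# (x, y, width, height) of the overlay area, in pixels
Box = Tuple[float, float, float, float]


@dataclass
class BoxSmoother:
    """Exponential moving average over successive bounding boxes."""

    alpha: float = 0.3  # hypothetical weight: higher follows the camera faster
    _state: Optional[Box] = None

    def update(self, box: Box) -> Box:
        if self._state is None:
            # First frame: nothing to blend with yet.
            self._state = box
        else:
            a = self.alpha
            # Blend each coordinate of the new box with the previous estimate.
            self._state = tuple(
                a * new + (1 - a) * old for new, old in zip(box, self._state)
            )
        return self._state
```

Calling `update` once per camera frame and drawing the returned box, rather than the raw detection, trades a little lag for a steadier overlay. Because it is a single blend per coordinate, it adds very little work per frame.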

I decided to use the open source computer vision library OpenCV to handle the tracking for my AR feature. I started by exploring one of the most useful methods that OpenCV provides for image analysis – contour detection. Contours are basically outlines of objects or regions as detected by some image analysis algorithm.
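To make the idea of a contour concrete, here is a toy sketch in plain Python. It is not OpenCV's algorithm (OpenCV's `findContours` follows borders and returns ordered point lists); it only marks which pixels of a binary mask sit on the edge of a region. The function name `outline_pixels` is hypothetical.

```python
from typing import List, Set, Tuple


def outline_pixels(mask: List[List[int]]) -> Set[Tuple[int, int]]:
    """Return (row, col) of foreground pixels that touch background or the image edge."""
    rows, cols = len(mask), len(mask[0])
    outline: Set[Tuple[int, int]] = set()
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue  # background pixels are never part of an outline
            # Check the four direct neighbours (up, down, left, right).
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or not mask[nr][nc]:
                    outline.add((r, c))
                    break
    return outline
```

For a solid 3x3 square in the middle of a 5x5 mask, this keeps the eight pixels around the rim and drops the centre pixel.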

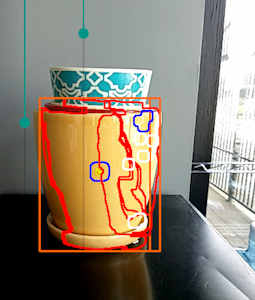

Example showing contours for AR use that were found in the environment with OpenCV, a key feature allowing AR to “see”


Using contour detection, I was able to find BrightPot, but only if it was the only object in the field of vision. I briefly attempted to analyze the found shapes, but unfortunately a plant pot is not a very distinctive shape. We see examples of image recognition all the time that are able to identify varied objects in complicated scenes, but these tools all incorporate AI on some level. This kind of image recognition is probably overkill in a lot of applications.

Using Markers to Enhance Accuracy and Precision

Luckily, there is a strategy in AR that is a perfect fix for this issue: markers. A marker is a known visual feature that can be used to quickly identify a relevant surface. For example, the Vuforia SDK uses markers to orient the ‘ground’ plane on which to project three-dimensional models. The first marker I used was a small smiley face sticker stuck to the surface of the plant pot. I located this sticker by filtering the input image to only show pixels falling within a set hue range, finding all the contours on that binary output image and then looking for the most circular one. This part worked pretty well as long as there weren’t other circular things around, but I still needed to back up a step and actually find the boundaries of the pot. This worked out OK, but turned out to be difficult to do consistently, and since it was a two-step operation, I started running into performance issues.
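The two ideas in the sticker search, a hue filter and a "most circular" test, can be sketched in plain Python. This is a rough illustration under assumptions, not the BrightPot code: the yellow hue range, the saturation and brightness cut-offs, and the function names are all hypothetical, and in OpenCV the area and perimeter would come from the detected contours.

```python
import colorsys
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def hue_mask(
    pixels: List[List[Pixel]],
    hue_lo: float,
    hue_hi: float,
    min_sat: float = 0.4,  # hypothetical cut-off to ignore washed-out pixels
    min_val: float = 0.3,  # hypothetical cut-off to ignore very dark pixels
) -> List[List[int]]:
    """Binary mask: 1 where the pixel's hue (in degrees, 0-360) is within range."""
    mask = []
    for row in pixels:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            keep = hue_lo <= h * 360 <= hue_hi and s >= min_sat and v >= min_val
            out.append(1 if keep else 0)
        mask.append(out)
    return mask


def circularity(area: float, perimeter: float) -> float:
    """4*pi*area / perimeter^2: 1.0 for a perfect circle, lower for other shapes."""
    if perimeter == 0:
        return 0.0
    return 4 * math.pi * area / perimeter ** 2
```

With this score, a circle rates 1.0 and a square rates about 0.785, so picking the contour with the highest score is one way to pick "the most circular one". Note that a range wrapping around red (for example 350 to 10 degrees) would need two checks rather than one.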

The orange bounding box here is correct, but this method is way over-complicated and couldn’t keep up with the camera frame rate

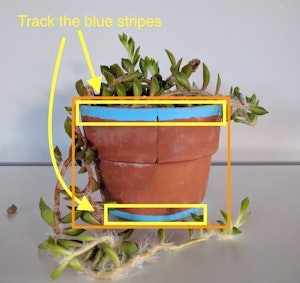

I fixed this by rethinking the design of my marker – if I could simultaneously identify the marker and the boundaries of the object that it was marking, that would be ideal. So, I painted two stripes on a standard plant pot – one at the top rim and one along the bottom edge. I used the same color filtering to find these areas which conveniently also give enough information to determine a reasonable bounding rectangle on which to position the overlay.

Much easier! The blue stripes help identify the object and also determine the general shape of the area where we want to place the overlaid information

This worked much better. In addition to providing more information about the boundaries of the plant pot, the blue stripes were a bigger target than the sticker and also had the added bonus of being visible from all angles. Even when the stripe is partially obscured by the plant, another pot or some bright light or shadow, there is enough information to find a reasonable bounding box in most cases.
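Once the color filter has produced a binary mask of the stripe pixels, one simple way to get an overlay area is to take the rectangle that encloses every matching pixel. The post does not say exactly how the bounding rectangle was computed, so treat this as an assumption; the function name `bounding_box` is hypothetical.

```python
from typing import List, Optional, Tuple


def bounding_box(mask: List[List[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Smallest (x, y, width, height) rectangle enclosing all set pixels, or None."""
    coords = [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    ]
    if not coords:
        return None  # no stripe pixels found in this frame
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    top, left = min(rows), min(cols)
    return (left, top, max(cols) - left + 1, max(rows) - top + 1)
```

Because the rectangle depends only on the outermost matching pixels, a gap in one stripe (a leaf in front of it, for instance) does not change the result as long as the other stripe still shows the full width. It also means any stray blue pixel elsewhere in the frame would stretch the box, which is the wallpaper problem in miniature.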

BrightPot now has the ability to communicate via data and expression to tell me how it’s doing

Harnessing the Power of Simple Computer Vision and AR

There is still a lot of room for refinement and optimization, but by going through this process I’ve started to get a handle on the tasks and possible solutions involved in this kind of simple computer vision/AR. For example, my current strategy is not going to work against blue striped wallpaper, but now I can see ways that I could start tackling that edge case and others. It’s exciting to work with emerging technologies for this reason.

DevelopmentNow is committed to investing time and resources to make sure our developers are experienced and knowledgeable with emerging technology. We’d love to meet with you to see how Augmented Reality technology can help your business or be included in your service offerings – Contact Us!

For more information about BrightPot, click here.